
Controversial Moz Strategies: Are SEO Tools Making Us Lazy?

A debate-centric look at whether SEO tools like Moz are accelerating results - or eroding our craft. Learn when to trust automation, where creativity matters most, and a practical checklist to keep tools from turning you into a cookie-cutter optimizer.

Outcome first: by the end of this article you’ll have a practical framework to keep Moz and other SEO tools working for you - not the other way around. You’ll be able to triage opportunities quickly, spot when a metric is misleading, and run experiments that rebuild real SEO intuition.

Why does that matter right now? Because everyone has access to the same dashboards. The playing field looks level. But true advantage still comes from the thinking behind the metrics.

The rise of SEO tools - helpful, addictive, inevitable

Tools like Moz, Ahrefs and Semrush let us do in minutes what used to take teams days. They surface keywords, map links, estimate traffic and score pages. They automate repetitive work. They scale discovery.

That’s the upside. But automation always has a trade-off: speed for depth. Use the tools right and you accelerate good decisions. Use them as substitutes for thinking and you make the same mistakes faster.

What’s at stake

- Short-term wins vs long-term learning. Quick fixes can mask gaps in skill.

- Creativity and differentiated strategy. Copyable outputs create copycat campaigns.

- Misinterpreting correlation as causation. A high score doesn’t prove impact.

The consequence: teams can become efficient at executing average ideas, not effective at discovering exceptional ones.

What Moz (and similar tools) actually do well

- Keyword discovery and grouping (Moz Keyword Explorer).

- Competitive backlink analysis (Link Explorer).

- On-page insights and audits (On-Page Grader / Site Crawl).

- Prioritization signals (keyword difficulty, estimated volume, spam scores).

Use them as triage: find where to look. They are excellent at turning noise into a short list of hypotheses to test.

For fundamentals, refer to the official docs: the Moz Learning Center and Google Search Central. For real data, go to the source: Google Search Console.

Where tools make us lazy - concrete examples

Over-trusting proprietary metrics.

- Domain Authority (DA) is a helpful relative indicator, not a Google ranking signal. Treat DA as directional, not prescriptive. See Moz’s explanation of DA: Domain Authority: Moz Learn.

Relying on tool-generated keyword lists without SERP inspection.

- Keyword volume and difficulty are estimates. They don’t reveal intent, SERP features, or the nuance of real queries. Manual SERP analysis reveals intent shifts and content gaps tools may miss.

Letting automation define content structure.

- When people follow canned headings from keyword tools, content converges. The result - thin, uninspired pages that rank for nothing significant.

Link outreach at scale without personalization.

- Templates are efficient. They’re also easy to ignore. High-volume outreach often means low reply rates and lower-quality links.

Ignoring analytics and user behavior.

- Tools show potential. Real impact shows up in Search Console, Analytics and on-site engagement. If you ignore those, you’re optimizing ghosts.

For more on where metrics mislead, see Search Engine Journal’s coverage of how to interpret authority metrics: Domain Authority explained.

The hidden cost: erosion of fundamentals

When teams outsource pattern recognition to tools, they stop practicing it. Junior SEOs see a score, not a problem. They learn to chase numbers rather than understand users.

There’s a well-documented automation paradox in other fields: automating tasks reduces hands-on practice, which atrophies skill. The same applies here. The tool makes you fast. It doesn’t make you right.

How to use Moz without becoming lazy - a practical framework

Use tools to generate hypotheses, not to make final recommendations.

- Example - Keyword Explorer suggests a cluster. Your job: inspect the SERP, read top-ranking pages, map user intent, then decide whether that cluster matters.

Always validate with primary data.

- Cross-check Moz signals with Google Search Console impressions, clicks and real queries. Merge with GA4 engagement metrics and server logs.
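
One way to make that cross-check routine is a small script that joins a keyword export from your tool with a query export from Search Console. The sketch below is a minimal illustration, not a finished pipeline: the column names (`keyword`, `volume`, `query`, `impressions`, `clicks`), the sample rows and the 100-impression threshold are all assumptions, so adapt them to whatever your exports actually contain.

```python
import csv
import io


def load_rows(csv_text, key_column):
    """Index CSV rows by a lower-cased, stripped key column."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return {row[key_column].strip().lower(): row for row in reader}


def cross_check(tool_rows, gsc_rows, min_impressions=100):
    """Sort keywords into three buckets.

    confirmed:   suggested by the tool and seen in real search data
    unconfirmed: suggested by the tool but with little or no real demand
    missed:      real queries with demand that the tool never suggested
    """
    confirmed, unconfirmed = [], []
    for keyword in tool_rows:
        impressions = int(gsc_rows.get(keyword, {}).get("impressions", 0))
        if impressions >= min_impressions:
            confirmed.append(keyword)
        else:
            unconfirmed.append(keyword)
    missed = [
        query
        for query, row in gsc_rows.items()
        if query not in tool_rows and int(row["impressions"]) >= min_impressions
    ]
    return sorted(confirmed), sorted(unconfirmed), sorted(missed)


# Hypothetical sample data standing in for real CSV exports.
TOOL_EXPORT = """keyword,volume
seo audit checklist,1900
domain authority checker,5400
"""
GSC_EXPORT = """query,impressions,clicks
seo audit checklist,820,41
how to audit a site without tools,450,37
domain authority checker,12,0
"""

tool = load_rows(TOOL_EXPORT, "keyword")
gsc = load_rows(GSC_EXPORT, "query")
confirmed, unconfirmed, missed = cross_check(tool, gsc)
print("confirmed:", confirmed)
print("unconfirmed:", unconfirmed)
print("missed by tool:", missed)
```

The “missed” bucket is usually the interesting one: those are real queries your audience types that no keyword report told you to care about.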

Run small experiments with measurable outcomes.

- A/B test title tags and meta descriptions where possible. Track position changes and CTR lift in Search Console.
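
To judge whether a CTR change is more than noise, you can compare clicks and impressions from before and after the change. The sketch below uses a standard two-proportion z-test; the function names and the sample numbers are made up for illustration. Treat the result as a sanity check rather than proof: a before/after comparison is not a true split test, and seasonality or position shifts can move CTR on their own.

```python
import math


def ctr(clicks, impressions):
    """Click-through rate as a fraction between 0 and 1."""
    return clicks / impressions if impressions else 0.0


def ctr_lift(before, after):
    """Compare two (clicks, impressions) pairs.

    Returns (relative_lift, z_score, two_sided_p_value).
    """
    c1, n1 = before
    c2, n2 = after
    if n1 == 0 or n2 == 0:
        raise ValueError("both periods need at least one impression")
    p1, p2 = ctr(c1, n1), ctr(c2, n2)
    # Pooled CTR under the assumption that nothing changed.
    pooled = (c1 + c2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p2 - p1) / se if se else 0.0
    # Two-sided p-value from the normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    lift = (p2 - p1) / p1 if p1 else float("inf")
    return lift, z, p_value


# Hypothetical numbers: 2.0% CTR before the title change, 2.6% after.
lift, z, p_value = ctr_lift(before=(200, 10_000), after=(260, 10_000))
print(f"relative lift: {lift:.0%}, z = {z:.2f}, p = {p_value:.4f}")
```

A small p-value says the difference is unlikely to be chance alone. It does not say the title tag caused it, which is why comparable pages and time windows matter.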

Create a “no-tool” audit cadence.

- Once per quarter, pick 5 pages and audit them without dashboards - read the content, search the keyword manually, click competitors, and note user experience issues.

Train interpretation, not just operation.

- Teach teams what each Moz metric means and doesn’t mean. Train them to ask - “If we improve this metric, how will user behavior or revenue change?”

Personalize outreach.

- Use templates to scale but write the first sentence by hand. Demonstrate value before asking for a link.

Prioritize creative bets.

- Reserve budget/time each month for contrarian or experimental content that wouldn’t surface in standard keyword reports.

A sample day-to-day workflow that resists complacency

- Morning - quick Moz crawl and keyword report to surface anomalies.

- Midday - manual SERP review for top 5 opportunities. Read competitor pages.

- Afternoon - run an experiment, adjust copy, or perform a qualitative user test.

- Weekly - compare Moz suggestions with Search Console trends. Flag mismatches for analysis.

This hybrid flow keeps the tool in a supporting role.

Team and process tweaks to keep creativity healthy

- Hire for curiosity, not just Excel skills.

- Reward experiments and learnings, not just ranking increases.

- Insist on human sign-off for every automated recommendation.

- Run post-mortems on failed tactics to separate tool error from strategic error.

Metrics to be skeptical of (and how to interpret them)

- Domain Authority - directional, not causal.

- Keyword Difficulty scores - useful for prioritization but opaque. Combine with manual SERP signals.

- Estimated traffic - rough order-of-magnitude only. Validate with real clicks from Search Console.

When in doubt, ask: “If this number changes, how will the business change?”
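
For the estimated-traffic check in particular, “order of magnitude” can be made concrete with a log ratio. This is a rough sketch under stated assumptions: the function names are hypothetical, and the 0.5 tolerance (roughly a factor of three either way) is an arbitrary starting point, not a standard.

```python
import math


def magnitude_gap(estimated, actual):
    """log10 of estimate / actual: 0 means a match, 1 means a tenfold overestimate."""
    if estimated <= 0 or actual <= 0:
        return None
    return math.log10(estimated / actual)


def classify(estimated, actual, tolerance=0.5):
    """Label a tool estimate against real Search Console clicks."""
    gap = magnitude_gap(estimated, actual)
    if gap is None:
        return "no data"
    if gap > tolerance:
        return "overestimated"
    if gap < -tolerance:
        return "underestimated"
    return "same order of magnitude"


# Hypothetical (estimated monthly visits, actual monthly clicks) pairs.
for estimated, actual in [(1200, 900), (5000, 300), (100, 2000), (500, 0)]:
    print(estimated, actual, classify(estimated, actual))
```

Pages that land in the over- or underestimated buckets are the ones where the tool’s number should carry the least weight in planning.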

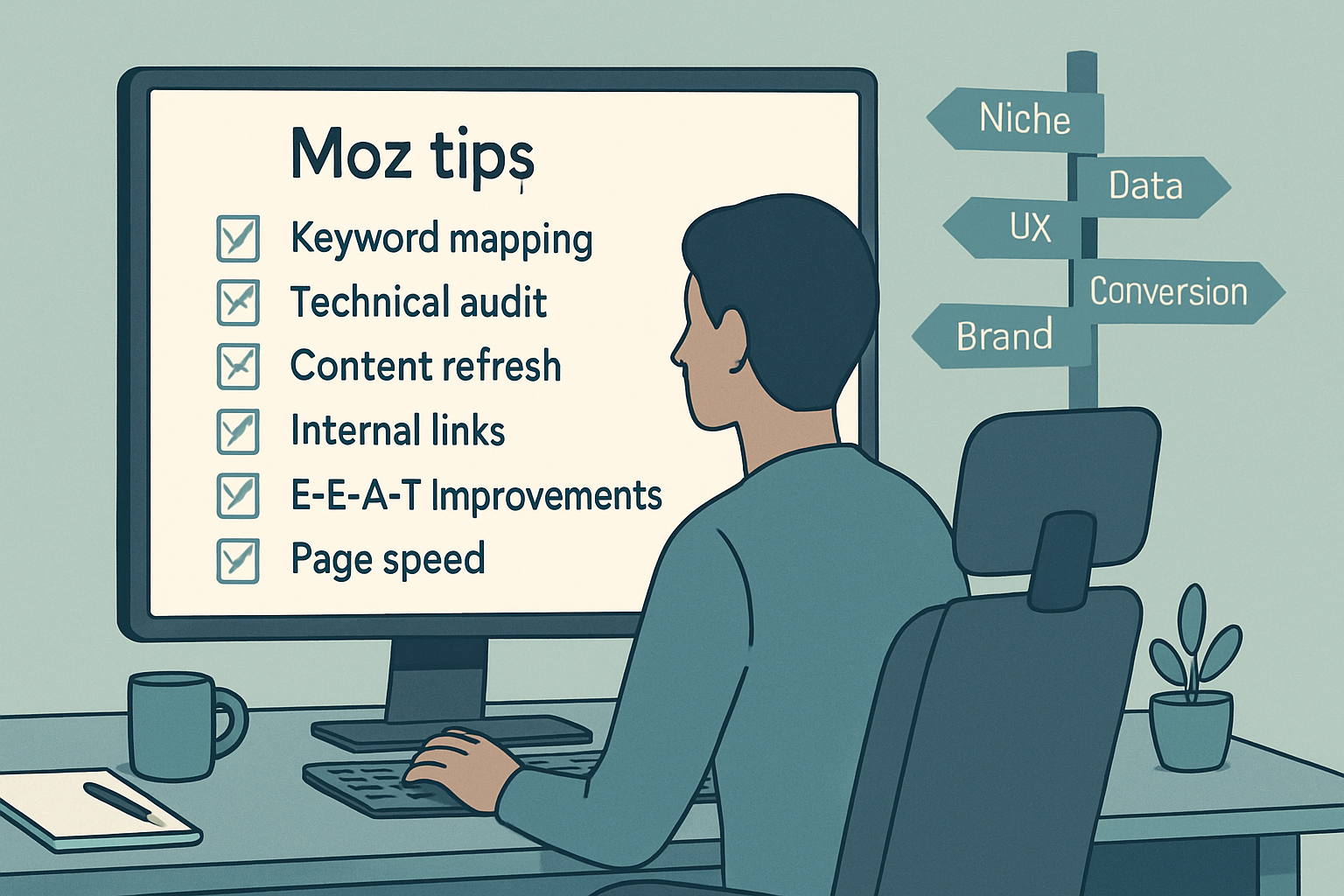

Final checklist - keep Moz as an accelerator, not a crutch

- Use tools to find opportunities, not to finalize strategy.

- Validate tool insights with Search Console / analytics.

- Manually inspect SERPs before creating content.

- Run experiments and measure outcomes.

- Keep a calendar of ‘no-tool’ audits.

- Train team members on metric meaning and limits.

- Allocate time for creative, contrarian content.

Conclusion

SEO tools like Moz are powerful accelerators. They surface opportunities, remove grunt work, and help scale discovery. But when teams hand their thinking over to dashboards, they shrink their tactical imagination and weaken their core judgment.

Use Moz to get smart fast. Then, get your hands dirty. Read pages. Talk to users. Run experiments. Make decisions where the tool is silent.

Tools don’t make us lazy. We do - when we let convenience replace curiosity, and automation replace apprenticeship. Choose otherwise.